
Zhang Receives NSF CAREER Award

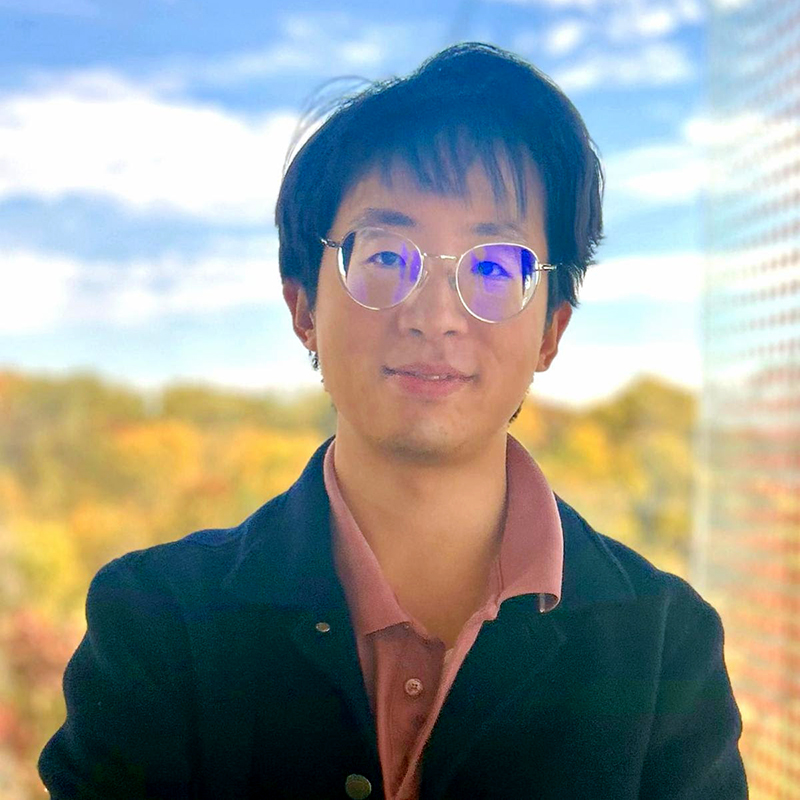

Professor Kaiqing Zhang has been named a 2025 NSF CAREER Award recipient for his proposal on the Theoretical Foundations of Multi-agent Learning in Dynamic Environments. He will receive $538,000 over five years to support his research. The NSF CAREER Award is a highly competitive and prestigious award given to early-career faculty who have the potential to serve as academic role models in research and education and to lead advances in the mission of their department or organization.

Recent years have seen notable successes of autonomous agents that make decisions and learn in dynamic environments, with reinforcement learning (RL) as a prominent example. Success stories include AlphaGo, autonomous driving, robot learning, power grid control, and, more recently, the post-training of large language models such as ChatGPT. Most of these scenarios inherently involve “multiple agents” interacting with one another. More importantly, these agents usually operate under “information constraints”: each has access only to local, decentralized information that differs across agents, may come from delayed or noisy measurements, and does not reveal the underlying state of the environment. “As we know from the story of ‘the blind men and an elephant,’” Zhang said, “each blind man feels only one part of the elephant, making it challenging to guess what an elephant really is.” The problem becomes even harder when the agents have conflicting or misaligned objectives, act strategically, and lack accurate knowledge of the world.

Despite this practical relevance, the theoretical foundations of dynamic learning under such information constraints are far from well developed, especially in the RL and machine learning literature. On the other hand, a rich literature in control theory has investigated the fundamental limits imposed by these information constraints in decentralized stochastic control and decision-making, producing many intriguing hardness results that sharply distinguish single-agent from multi-agent decision-making. These classical results, however, mostly focus on the “optimization tractability” of the problem when full knowledge of the model is available. Zhang therefore asked a natural and fundamental question: can we better understand the role of such information constraints in learning efficiency, especially in terms of non-asymptotic statistical and computational complexity guarantees, when the model is not (fully) known? Answering it could bridge insights from control and RL, with the hope of inspiring and addressing new open questions in dynamic multi-agent learning.

In preliminary work, Zhang and his students have shown that (i) certain “information-sharing” patterns studied in control theory can improve the efficiency of multi-agent learning in partially observable dynamic environments; and (ii) a newer training scheme from modern empirical multi-agent RL, namely learning with “privileged information” during training, which can be viewed as an “asymmetric” information structure between the training and testing phases, has provable benefits in sample and computational complexity.

Building on these efforts, Zhang proposed to further investigate the “learning efficiency” (i.e., the sample and computational complexity) of multi-agent learning under other information structures, and to provide theoretical grounding for several new information structures that have appeared in the empirical multi-agent RL (MARL) literature. He also proposed to study the “emergent behaviors” of multiple independent learning agents under such information constraints. This work would extend the classical “learning-in-games” perspective in economics to dynamic games with information constraints. That perspective justifies “equilibrium” as the long-run emergent behavior of independent learning, “as in the emergence of traffic network equilibrium from the local and independent agents’ self-interested behaviors,” Zhang said. The proposed research is highly interdisciplinary, drawing on foundations from control theory, game theory, statistics, the theory of computation, and economics.

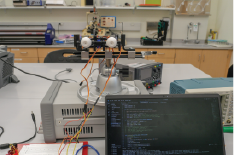

This award supports Prof. Zhang’s long-term career goal of advancing the foundations of large-scale autonomy to address pressing societal and economic challenges. In particular, multi-agent autonomous systems are prevalent in socio-technical applications such as transportation systems, power networks, robotics, and supply chains, which naturally operate in dynamic environments and often suffer from the information constraints described above. The project thus has the potential to significantly improve these socio-technical systems through fundamental principles and new algorithms. Zhang will also work closely with practitioners and industry partners in robotics, autonomous vehicles, logistics, and finance to develop new testbeds and evaluate these methods on them, working toward real-world multi-agent autonomous systems.

Zhang joined UMD in October 2022. His work at the intersection of control theory, machine learning, and game theory has been published in top-tier machine learning conferences and prestigious control theory journals, and it was recognized with several awards before this CAREER Award, including the Simons-Berkeley Research Fellowship, the CSL Thesis Award, the ICML Outstanding Paper Award, and AAAI New Faculty Highlights.

Published January 22, 2025