
Revolutionizing the Test

One of the biggest challenges facing instructors in the virtual classroom is testing integrity. How do faculty conduct a fair and honest assessment of a student’s knowledge when that student is behind a computer screen in an uncontrolled environment?

This fall, the Clark School is launching a pilot of PrairieLearn, an open-source online homework and exam system that supports customized student assessment in an asynchronous (and, in this case, remote) environment. Developed by the University of Illinois in 2014, PrairieLearn lets instructors generate a customized test for each student; no two tests are the same, which cuts down on academic dishonesty, particularly when students are not being assessed in a closely proctored testing environment. Because questions are re-randomized on each try, students are not only allowed but encouraged to make multiple attempts, earning credit for each until they achieve mastery.
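For readers curious about how per-student randomization can work, here is a minimal Python sketch of the general idea. It is an illustration only, not PrairieLearn's actual code: the function names `make_variant` and `grade`, the seeding scheme, and the sample physics question are all assumptions made for this example.

```python
import random


def make_variant(student_id: str, attempt: int) -> dict:
    """Build one randomized question variant (hypothetical helper)."""
    # Assumption: seeding on student and attempt number makes a variant
    # reproducible for regrading while letting the numbers change
    # between students and between retries.
    rng = random.Random(f"{student_id}:{attempt}")
    mass_kg = rng.randint(2, 20)
    accel_ms2 = rng.randint(1, 9)
    return {
        "prompt": (
            f"A {mass_kg} kg block accelerates at {accel_ms2} m/s^2. "
            "What net force, in newtons, acts on it?"
        ),
        "answer": mass_kg * accel_ms2,  # F = m * a
    }


def grade(variant: dict, submitted: float, tol: float = 1e-6) -> bool:
    """Return True when the submitted value matches this variant's answer."""
    return abs(submitted - variant["answer"]) <= tol
```

Calling `make_variant("student-a", 1)` and then `make_variant("student-a", 2)` regenerates the question for a second attempt, so a student who retries practices the method on fresh numbers instead of reusing a looked-up answer.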

“It is very easy for students to get stuck on a problem, look up the answer, and simply move on,” says Kenneth Kiger, associate dean for undergraduate programs. “They might get the answer correct on the homework, but the risk is they never really take the time to understand it at a deeper level—and that hurts them when they take the exam. This program will offer the mechanisms for productive practice to gain confidence and a deeper understanding of the material.”

The pilot, led by Assistant Professor Mark Fuge with the support of several faculty and administrators at the Clark School, is running this fall in five large-enrollment undergraduate courses in the Department of Mechanical Engineering, with plans for wider adoption schoolwide. While the program is uniquely beneficial in a remote setting, it will offer even more benefits when students return to campus; school administrators hope to stand up an on-campus testing center, giving students more flexibility to schedule exams on their own time, when they’re ready.

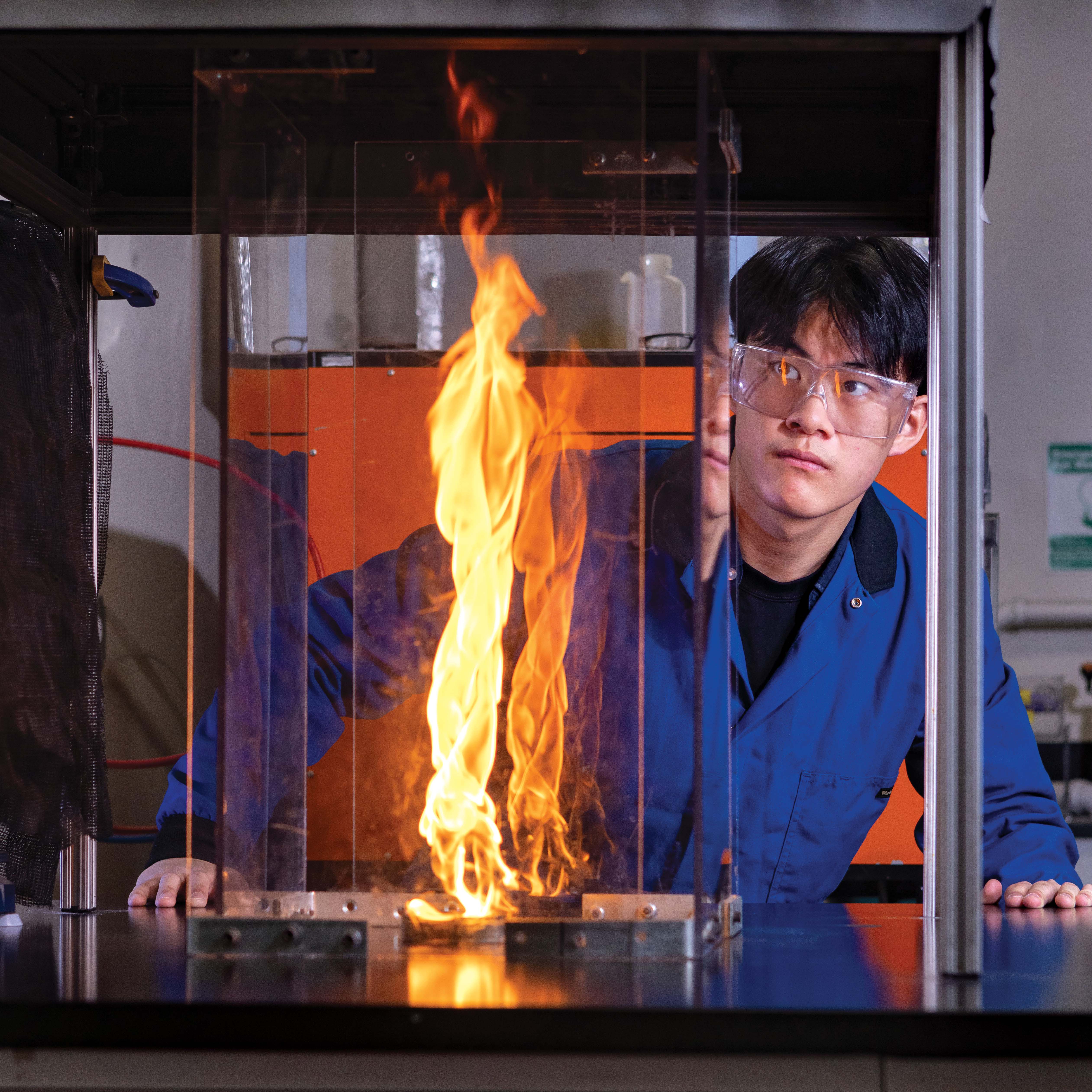

“I think for all courses, figuring out how to properly assess students is a challenge,” says Keystone Lecturer Cara Hamel (’15, M.S. ’16 fire protection engineering).

Hamel, who teaches ENES232, relies heavily on assessments to promote retention of the foundational yet complex subject of thermodynamics. But when the class moved online in the spring, she felt the assessment-heavy format was no longer serving the students in the way it was intended.

“Students not only had the stress of studying for a two-hour exam and taking it, but also the logistics of doing it online,” she says. Hamel points to home and life challenges, poor internet connectivity, and technical difficulties as obstacles she saw her students endure this past spring: “We wanted to come up with something that kept the learning outcomes, but was better for the students.”

With the help of the UMD Teaching and Learning Transformation Center, Hamel and colleagues broke down their assessments into smaller, more manageable tests and quizzes and integrated different methods for delivering the questions. Hamel’s group also opened up reference resources, allowing students to use their notes, textbooks, and, for the first time, a computational resource called the Engineering Equation Solver (EES), a tool that students can learn through low-stakes, asynchronous, gamified training modules developed by Hamel and her team. Students historically haven’t been able to use computational tools like EES on exams, but the shift to computer-based testing prompted by COVID-19 has created, if not necessitated, the opportunity to allow them, something many Clark School faculty have long wanted.

“We’re hoping this helps students not only have more success in homework and tests, but also gain some confidence in computational tools, which is important as they continue to progress in their degree,” says Hamel.

Published August 14, 2020